SSS Performance Test Specification Coming Soon

A new Performance Test Specification (PTS) for solid state storage is about to be released for Public Technical Review by the SNIA SSSI and SSS TWG. The SNIA PTS is a device-level performance specification for solid state storage testing that sets forth standard terminology, metrics, methodologies, tests and reporting for NAND Flash-based SSDs. SNIA plans to release the final PTS v1.0 later this year as a SNIA architecture, on track for INCITS and ANSI standards treatment.

Why do we need a Solid State Storage Performance Test Specification?

Lack of Industry Standards / Difficulty in Comparing SSD Performance

There has been no industry-standard test methodology for measuring solid state storage (SSS) device performance. As a result, each SSS manufacturer has used its own measurement methodology to derive performance specifications for its products. This has made it difficult for purchasers to fairly compare the performance specifications of SSS products from different manufacturers.

The SNIA Solid State Storage Technical Working Group (SSS TWG), working closely with the SNIA Solid State Storage Initiative (SSSI), has developed the Solid State Storage Performance Test Specification (SSS PTS) to address these issues. The SSS PTS defines a suite of tests and test methodologies that effectively measure the performance characteristics of SSS products. When executed in a specific hardware/software environment, SSS PTS provides measurements of performance that may be fairly compared to those of other SSS products measured in the same way in the same environment.

Key Concepts

Key concepts of the PTS include proper pre-test preparation, setting the appropriate test parameters, running the prescribed tests, and reporting results consistent with PTS protocol. For all testing, the Device Under Test (DUT) must first be purged (to ensure a repeatable test start point) and then preconditioned (by writing a prescribed access pattern of data, so that measurements are taken when the DUT is in a steady state). Measurements are then taken in a prescribed steady state window, defined as a range of five rounds of data that stay within a prescribed excursion range for the data averages.
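The steady state window can be illustrated with a short sketch. It is not the specification's normative definition: the function names, the sample IOPS values and the 20% excursion threshold are assumptions chosen for illustration, and the PTS itself prescribes the actual criteria.

```python
from statistics import mean


def is_steady_state(rounds, window=5, max_excursion=0.20):
    """Check whether the last `window` rounds stay inside the excursion band.

    `max_excursion` is an assumed, illustrative threshold: the spread
    (max - min) of the window must not exceed this fraction of the
    window average.
    """
    if len(rounds) < window:
        return False
    recent = rounds[-window:]
    avg = mean(recent)
    if avg == 0:
        return False
    return (max(recent) - min(recent)) <= max_excursion * avg


def first_steady_round(rounds, window=5, max_excursion=0.20):
    """Return the 1-based round at which steady state is first reached, else None."""
    for end in range(window, len(rounds) + 1):
        if is_steady_state(rounds[:end], window, max_excursion):
            return end
    return None


# Hypothetical per-round IOPS: high after the purge, then settling.
iops_per_round = [52000, 41000, 30500, 24800, 21000, 20400, 20100, 19900, 20200]
print(first_steady_round(iops_per_round))
```

With these made-up numbers the early rounds fail the check because the drive is still slowing down from its fresh state; only the final five rounds are close enough to their own average to count as a measurement window.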

Standard Tests

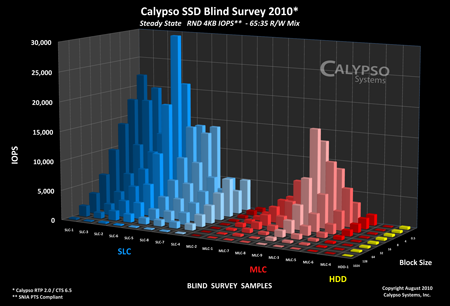

The PTS sets forth three standard tests for client and enterprise SSDs: IOPS, Throughput and Latency, measured in IOs per second, MB per second and average msec respectively. The test loop rounds consist of a Random data pattern stimulus in a matrix of R/W mixes and Block Sizes at a prescribed demand intensity (outstanding IOs, i.e. queue depth and thread count). The user can extract from this matrix the performance measurements that relate to workloads of interest. For example, 4K RND W can equate to small block IO workloads typical in OLTP applications, while 128K R can equate to large block sequential workloads typical in video on demand or media streaming applications.
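As a rough sketch of how such a matrix might be represented and queried, the example below builds the cross product of R/W mixes and block sizes and converts an IOPS figure to MB per second. The specific mixes, block sizes, result value and helper names are hypothetical, and the conversion assumes 1 MB = 10^6 bytes; the PTS defines the actual matrix and units.

```python
from itertools import product

# Illustrative values only; the specification defines the real matrix.
RW_MIXES = [(100, 0), (65, 35), (0, 100)]  # (read %, write %)
BLOCK_SIZES_KIB = [4, 8, 64, 128]


def build_matrix(rw_mixes, block_sizes_kib):
    """Return every (R/W mix, block size) cell to be measured in a round."""
    return list(product(rw_mixes, block_sizes_kib))


def iops_to_mb_per_s(iops, block_size_kib):
    """Convert IOPS at a given block size to MB/s (assuming 1 MB = 10**6 bytes)."""
    return iops * block_size_kib * 1024 / 1_000_000


matrix = build_matrix(RW_MIXES, BLOCK_SIZES_KIB)

# Hypothetical steady state result for one cell: 4K, 100% write.
results = {((0, 100), 4): 20000}

cell = ((0, 100), 4)  # the "4K RND W" workload of interest
print(len(matrix), iops_to_mb_per_s(results[cell], block_size_kib=4))
```

Keying results by (R/W mix, block size) keeps the lookup for a workload of interest a single dictionary access, which mirrors how a reader would pick one cell out of the reported matrix.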

Reference Test Environment

The SNIA PTS is hardware and software agnostic: the specification does not require any specific hardware, OS or test software to be used to run the tests. However, SSD performance is greatly affected by the system hardware, OS and test software (the test environment). Because SSDs can be 100 to 1,000 times faster than HDDs, care must be taken that the test environment does not introduce performance bottlenecks into the measurements.

The PTS addresses this by setting forth basic test environment requirements and listing a suggested Reference Test Platform (RTP) in an informative annex. This RTP was used by the TWG in developing the PTS. Other hardware and software can be used, and the TWG is actively seeking industry feedback based on results from the RTP and from other test environments.

Standard Reporting

The PTS also includes an informative annex with a recommended test reporting format. This sample format reports all of the test and result information required by the PTS, to aid in comparing test data for solid state storage performance.

Facilitate Market Adoption of Solid State Storage

The SSS PTS will facilitate broader market adoption of Solid State Storage technology within both the client and enterprise computing environments.

SSS PTS version 0.9 will be posted very shortly at http://www.snia.org/publicreview for public review. The public review phase is a 60-day period during which the proposed specification is publicly available and feedback is gathered (via http://www.snia.org/tech_activities/feedback/) across the worldwide storage industry. Upon completion of the public review phase, the SSS TWG will remove the SSS PTS from the web site, consider all submitted feedback, make modifications, and ultimately publish version 1.0 of the ratified SSS PTS.

PTS Press Release

Watch for the press release on or about July 12, and keep an eye on http://www.snia.org/forums/sssi for updates.