Recently, a question came up in the SNIA Computational Storage Special Interest Group on new developments in a technology called eBPF and how they might relate to computational storage. To learn more, SNIA on Storage sat down with Eli Tiomkin, SNIA CS SIG Chair with NGD Systems; Matias Bjørling of Western Digital; Jim Harris of Intel; Dave Landsman of Western Digital; and Oscar Pinto of Samsung.

SNIA On Storage (SOS): The eBPF.io

website defines eBPF, extended Berkeley Packet Filter, as a revolutionary

technology that can run sandboxed programs in the Linux kernel without changing

kernel source code or loading kernel modules.

Why is it important?

Dave Landsman (DL): eBPF

emerged in Linux as a way to do network filtering, and enables the Linux kernel

to be programmed. Intelligence and

features can be added to existing layers, and there is no need to add

additional layers of complexity.

SNIA On Storage (SOS): What are the elements of eBPF that would be

key to computational storage?

Jim Harris (JH): The key to eBPF is that it is architecturally

agnostic; that is, applications can download programs into a kernel without

having to modify the kernel. Computational

storage allows a user to do the same types of things – develop programs on a

host and have the controller execute them without having to change the firmware

on the controller.

Using a hardware agnostic

instruction set is preferred to having an application need to download x86 or ARM

code based on what architecture is running.

DL: It is much easier to establish a standard

ecosystem with architecture independence. Instead of an application needing to download

x86 or ARM code based on the architecture, you can use a hardware agnostic

instruction set where the kernel can interpret and then translate the

instructions based on the processor. Computational storage would not need to

know the processor running on an NVMe device with this “agnostic code”.

SOS: How has the use of eBPF evolved?

JH: It is more

efficient to run programs directly in the kernel I/O stack rather than have to

return packet data to the user, operate on it there, and then send the data back

to the kernel. In the Linux kernel, eBPF began as a way to capture and filter

network packets. Over time, eBPF use has

evolved to additional use cases.

SOS:

What are some use case examples?

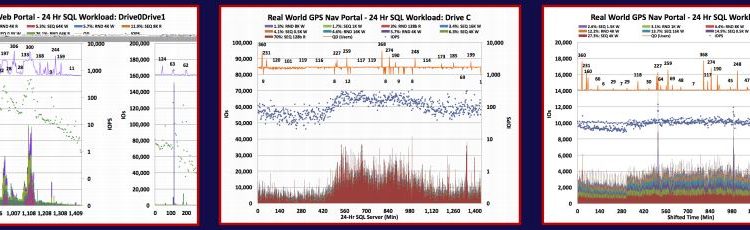

DL: One of the use cases is performance analysis. For

example, eBPF can be used to measure things such as latency distributions for

file system I/O, details of storage device I/O and TCP retransmits, and blocked

stack traces and memory.

Matias Bjørling (MB): Other examples in the Linux kernel

include tracing and gathering statistics.

However, while the eBPF programs in the kernel are fairly simple, and

can be verified by the Linux kernel VM, computational programs are more

complex, and longer running. Thus, there is a lot of work ongoing to explore

how to efficiently apply eBPF to computational programs.

For example, what is the right set of run-time restrictions

to be defined by the eBPF VM, any new instructions to be defined, how to make

the program run as close to the instruction set of the target hardware.

JH: One of the big use cases involves data analytics and

filtering. A common data flow for data analytics are large database table files

that are often compressed and encrypted.

Without computational storage, you read the compressed and encrypted data

blocks to the host, decompress and decrypt the blocks, and maybe do some

filtering operations like a SQL query.

All this, however, consumes a lot of extra host PCIe, host memory, and

cache bandwidth because you are reading the data blocks and doing all these

operations on the host. With computational

storage, inside the device you can tell the SSD to read data and transfer it

not to the host but to some memory buffers within the SSD. The host can then tell the controller to do a

fixed function program like decrypt the data and put in another local location

on the SSD, and then do a user supplied program like eBPF to do some filtering

operations on that local decrypted data.

In the end you would transfer the filtered data to the host. You are doing the compute closer to the

storage, saving memory and bandwidth.

SOS: How does using eBPF

for computational storage look the same? How does it look different?

Jim – There are two parts to this answer. Part 1 is the eBPF instruction set with

registers and how eBPF programs are assembled.

Where we are excited about computational storage and eBPF is that the

instruction set is common. There are already existing tool chains that support

eBPF. You can take a C program and compile it into an

eBPF object file, which is huge. If you

add computational storage aspects to standards like NVMe, where developing a unique

tool chain support can take a lot of work, you can now leverage what is already

there for the eBPF ecosystem.

Part 2 of the answer centers around the Linux kernel’s

restrictions on what an eBPF program is allowed to do when downloaded. For

example, the eBPF instruction set allows for unbounded loops, and toolchains

such as gcc will generate eBPF object code with unbounded loops, but the Linux

kernel will not permit those to execute – and rejects the program. These

restrictions are manageable when doing packet processing in the kernel. The kernel knows a packet’s specific data

structure and can verify that data is not being accessed outside the packet. With computational storage, you may want to

run an eBPF program that operates on a set of data that has a very complex data

structure – perhaps arrays not bounded or multiple levels of indirection. Applying Linux kernel verification rules to

computational storage would limit or even prevent processing this type of data.

SOS: What are some of

the other challenges you are working through with using eBPF for computational

storage?

MB: We know that x86

works fast with high memory bandwidth, while other cores are slower. We have some general compute challenges in

that eBPF needs to be able to hook into today’s hardware like we do for SSDs. What kind of operations make sense to offload

for these workloads? How do we define a

common implementation API for all of them and build an ecosystem on top of it? Do we need an instruction-based compiler, or a

library to compile up to – and if you have it on the NVMe drive side, could you

use it? eBPF in itself is great- but

getting a whole ecosystem and getting all of us to agree on what makes value

will be the challenge in the long term.

Oscar Pinto (OP): The Linux kernel for eBPF today is more

geared towards networking in its functionality but light on storage. That may

be a challenge in building a computational storage framework. We need to think

through how to enhance this given that we download and execute eBPF programs in

the device. As Matias indicated, x86 is great at what it does in the host

today. But if we have to work with smaller CPUs in the device, they may need

help with say dedicated hardware or similar implemented using additional logic

to aid the eBPF programs One question is how would these programs talk to them?

We don’t have a setup for storage like

this today, and there are a variety of storage services that can benefit from

eBPF.

SOS: Is SNIA addressing this challenge?

OP: On the SNIA side we are building on program functions

that are downloaded to computational storage engines. These functions run on the engines which are

CPUs or some other form of compute that are tied to a FPGA, DPU, or dedicated

hardware. We are defining these abstracted functionalities in SNIA today, and the

SNIA Computational

Storage Technical Work Group is developing a Computational

Storage Architecture and Programming Model and Computational Storage APIs to address it.. The latest versions, v0.8 and v0.5, has been

approved by the SNIA Technical Council, and is now available for public

review and comment at SNIA Feedback Portal.

SOS: Is there an eBPF standard? Is it aligned

with storage?

JH: We have a

challenge around what an eBPF standard should look like. Today it is defined in the Linux kernel. But if you want to incorporate eBPF in a

storage standard you need to have something specified for that storage

standard. We know the Linux kernel will

continue to evolve adding and modifying instructions. But if you have a NVMe

SSD or other storage device you have to have something set in stone –the

version of eBPF that the standard supports.

We need to know what the eBPF standard will look like and where will it

live. Will standards organizations need

to define something separately?

SOS: What

would you like an eBPF standard to look like from a storage perspective?

JH – We’d like an eBPF standard that can be used by

everyone. We are looking at how

computational storage can be implemented in a way that is safe and secure but

also be able to solve use cases that are different.

MB: Security will be

a key part of an eBPF standard. Programs

should not access data they should not have access to. This will need to be solved within a storage

device. There are some synergies with external key management.

DL: The storage community has to figure out how to work

with eBPF and make this standard something that a storage environment can take

advantage of and rely on.

SOS: Where do you see the future of eBPF?

MB: The vision is

that you can build eBPFs and it works everywhere. When we build new database systems and

integrate eBPFs into them, we then have embedded kernels that can be sent to

any NVMe device over the wire and be executed.

The cool part is that it can be anywhere on the path, so there becomes a

lot of interesting ways to build new architectures on top of this. And together

with the open system ecosystem we can create a body of accelerators in which we

can then fast track the build of these ecosystems. eBPF can put this into overdrive with use

cases outside the kernel.

DL: There may be

some other environments where computational storage is being evaluated, such as

web assembly.

JH: An eBPF run time is much easier to put into an SSD than

a web assembly run time.

MB: eBPF makes more sense – it is simpler to start and

build upon as it is not set in stone for one particular use case.

Eli Tiomkin (ET): Different

SSDs have different levels of constraints.

Every computational storage SSDs in production and even those in

development have very unique capabilities that are dependent on the workload

and application.

SOS: Any

final thoughts?

MB: At this point, technologies are coming together which

are going to change the industry in a way that we can redesign the storage systems

both with computational storage and how we manage security in NVMe devices for

these programs. We have the perfect

storm pulling things together. Exciting platforms can be built using open

standards specifications not previously available.

SOS:

Looking forward to this exciting future. Thanks to you all.

PIRL brings together software development leaders interested in learning about programming methodologies for persistent memories and sharing their experiences with others. This is a meeting for developer project leads on the front lines of persistent programming; not sales, marketing, or non-technical management.

PIRL brings together software development leaders interested in learning about programming methodologies for persistent memories and sharing their experiences with others. This is a meeting for developer project leads on the front lines of persistent programming; not sales, marketing, or non-technical management.